Ever had one of those days where everything’s going great, and then BAM! Your organic traffic drops overnight? That happened to me.

I logged in expecting smooth sailing, only to find that my site had been hacked: thousands of spammy pages full of Japanese text had been indexed, my rankings had collapsed, and Google search results were flooded with junk content.

Recovering was challenging, but with patience and a systematic approach, I restored my site and regained my traffic. Here’s everything I did, and what you can do too, to bounce back from the Japanese keyword hack.

What is the Japanese Keyword Hack?

For those who haven’t encountered it, the Japanese Keyword Hack is a nightmare for any website owner. It’s a type of SEO spam attack in which hackers inject pages filled with random Japanese text into your site, usually promoting suspicious products, fake stores, or questionable websites. Here’s how it typically works:

- Exploiting Vulnerabilities: Hackers take advantage of weaknesses in your site, such as outdated plugins, weak security settings, or unmonitored accounts, to inject malicious files.

- Hijacking Your Google Search Console: They often gain access to your Google Search Console, which lets them manipulate how your site is indexed. They’ll submit sitemaps full of spam pages so those pages get crawled and indexed quickly.

- Spamming Search Results: These pages are designed to be found in Google, which can damage your rankings, increase bounce rates, and lead to potential security warnings for visitors.
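If you want to check your own pages for this pattern, here’s a minimal Python sketch. It assumes you already have page titles (for example, from a crawl of your site) in a dictionary keyed by URL, and that your site isn’t normally in Japanese, so any title containing Japanese script is suspicious. The character ranges cover Hiragana, Katakana, and common CJK ideographs; the ideographs are shared with Chinese, so treat a match as a flag to review, not proof of a hack. The function names and the three-character threshold are illustrative choices, not part of any tool.

```python
import re

# Hiragana (U+3040-309F), Katakana (U+30A0-30FF), and CJK Unified Ideographs (U+4E00-9FFF).
# The ideographs are shared with Chinese, so a match means "CJK text", not strictly Japanese.
JAPANESE_CHARS = re.compile(r"[\u3040-\u309F\u30A0-\u30FF\u4E00-\u9FFF]")


def looks_japanese(text: str, min_chars: int = 3) -> bool:
    """True if `text` contains at least `min_chars` Japanese/CJK characters."""
    return len(JAPANESE_CHARS.findall(text)) >= min_chars


def flag_suspicious(titles: dict[str, str]) -> list[str]:
    """Given {url: page_title}, return the URLs whose titles look Japanese, sorted."""
    return sorted(url for url, title in titles.items() if looks_japanese(title))


if __name__ == "__main__":
    sample = {
        "https://example.com/about": "About Us",
        "https://example.com/abc123.html": "激安 ブランド 財布 通販",
    }
    print(flag_suspicious(sample))  # ['https://example.com/abc123.html']
```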

My Experience: The Initial Shock

I noticed the problem when my traffic dropped drastically overnight. After a quick check, I found my Google Search Console flooded with thousands of pages with Japanese keywords.

For a while, I convinced myself it was just a glitch and kept refreshing pages, but it quickly hit me: my site had been hacked. A deeper look revealed that the hacker had even added a sitemap full of spam pages to my Search Console!

How Did It Happen?

After some digging, I traced the issue to an old laptop I’d given to my sister without logging out of my accounts. She’d downloaded a movie streaming app that turned out to be malicious, and it snagged my login credentials, including access to my Google Search Console. This was my first major lesson in account security: always log out of any device you’re no longer in control of!

Step 1: Securing Accounts and Changing Passwords

Once I realized my accounts were compromised, I knew I had to act fast:

- Change All Passwords: I changed every password, from my web host to my Google accounts, so the stolen credentials would no longer work. With over 200 accounts stored, this was tedious but essential.

- Enable Two-Factor Authentication (2FA): On all critical accounts, I enabled 2FA to add an extra security layer, making it much harder for anyone to access my accounts without my phone.

- Revoke Unused Logins: I revoked access for any unmonitored devices or old accounts that hadn’t been used in a while.

Tip: Use a password manager to securely store your passwords and to easily generate complex ones for each account.
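A password manager handles this for you, but to illustrate what a generated “complex” password amounts to, here’s a small Python sketch using the standard `secrets` module, which draws from a cryptographically secure random source. The 20-character default and the 12-character minimum are arbitrary illustrative choices, not an official recommendation.

```python
import secrets
import string

# Letters, digits, and ASCII punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Generate a random password using a cryptographically secure RNG."""
    if length < 12:  # illustrative minimum, not a standard
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print(generate_password())
```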

Step 2: Restoring My Site from Backup

One of the smartest decisions I’d made was keeping regular backups of my site. I restored my website to a version from before the hack, which cleaned out the spammy pages and stopped new junk content from being indexed. Restoring from backup removed most of the malicious files in one step and saved me a lot of time.

Be Cautious with Robots.txt Blocking

Initially, I made the mistake of blocking these pages in my robots.txt file, assuming it would stop them from being indexed again. However, robots.txt controls crawling, not indexing: if Googlebot can’t crawl a blocked URL, it also can’t see that the page has been deleted, so the spam URL can linger in search results. That makes blocked spam pages harder, not easier, to deindex.

Lesson Learned: Use robots.txt carefully. For recovery, focus on removing the malicious pages and letting Google crawl them so it can see they’re gone, rather than blocking them outright.
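Before relying on Google to recrawl the removed pages, it’s worth checking that your robots.txt isn’t blocking them. This Python sketch uses the standard library’s `urllib.robotparser` to list which URLs a given robots.txt would stop Googlebot from fetching. Python’s parser is simpler than Google’s: it does plain prefix matching and doesn’t implement Google’s `*`/`$` wildcards or longest-match precedence, so treat the result as an approximation. The function name and sample URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that `robots_txt` stops `agent` from crawling.

    Approximation only: Python's parser does prefix matching and does not
    implement Google's '*'/'$' wildcards or longest-match precedence.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /spam/\n"
    urls = ["https://example.com/spam/abc123.html", "https://example.com/blog/post"]
    print(blocked_urls(robots, urls))  # ['https://example.com/spam/abc123.html']
```

Any spam URL this reports is one Google can’t recrawl to confirm it’s gone, so that rule would need to come out of robots.txt.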

Step 3: Avoiding the URL Removal Tool Trap

At first, I thought Google Search Console’s URL Removal Tool would help. But after some digging, I learned that this tool only hides URLs temporarily (for about six months); it doesn’t actually remove them from Google’s index. It’s meant for urgent, short-term hiding, not for a lasting cleanup, so I looked for a better alternative.

Using Google’s Outdated Content Tool

Google’s Outdated Content Tool (officially, the Refresh Outdated Content tool) turned out to be much more effective. It lets you ask Google to recrawl a URL and update its search results, so content you’ve already deleted from your site drops out of the index. This proved essential for clearing out the remnants of spam pages lingering in the index.

How to Use It:

- Go to Google’s Outdated Content Tool.

- Submit the URL of the outdated or spam page.

- Google will recrawl and update its index to reflect the changes, effectively clearing out the hacked content from search results.

Using this tool, I was able to ask Google to refresh its index based on the latest content on my site, which sped up the cleanup significantly.
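Before submitting a URL, it helps to confirm the page is really gone, since the goal is for Google to find nothing there when it recrawls. Here’s a minimal Python sketch, assuming you have a list of spam URLs and want to split them into those already returning 404 or 410 (ready to submit) and those that don’t (which need server-side cleanup first). It sends a HEAD request with the standard `urllib`; some servers handle HEAD poorly, and `urlopen` follows redirects, so a spam URL redirected to your homepage shows up as 200 and lands in the “not gone” bucket. All names here are hypothetical.

```python
import urllib.error
import urllib.request

# Status codes that tell Google the page no longer exists.
GONE_CODES = {404, 410}


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status code for `url` using a HEAD request.

    Network failures (urllib.error.URLError) propagate to the caller.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status  # redirects are followed automatically
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses raise HTTPError, which still carries the code.
        return err.code


def split_by_status(statuses: dict[str, int]) -> tuple[list[str], list[str]]:
    """Split {url: status} into (ready_to_submit, not_gone), each sorted."""
    ready = sorted(u for u, code in statuses.items() if code in GONE_CODES)
    not_gone = sorted(u for u, code in statuses.items() if code not in GONE_CODES)
    return ready, not_gone


# Example usage (makes real network requests):
# urls = ["https://example.com/abc123.html", "https://example.com/xyz789.html"]
# ready, not_gone = split_by_status({u: fetch_status(u) for u in urls})
```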

Tip

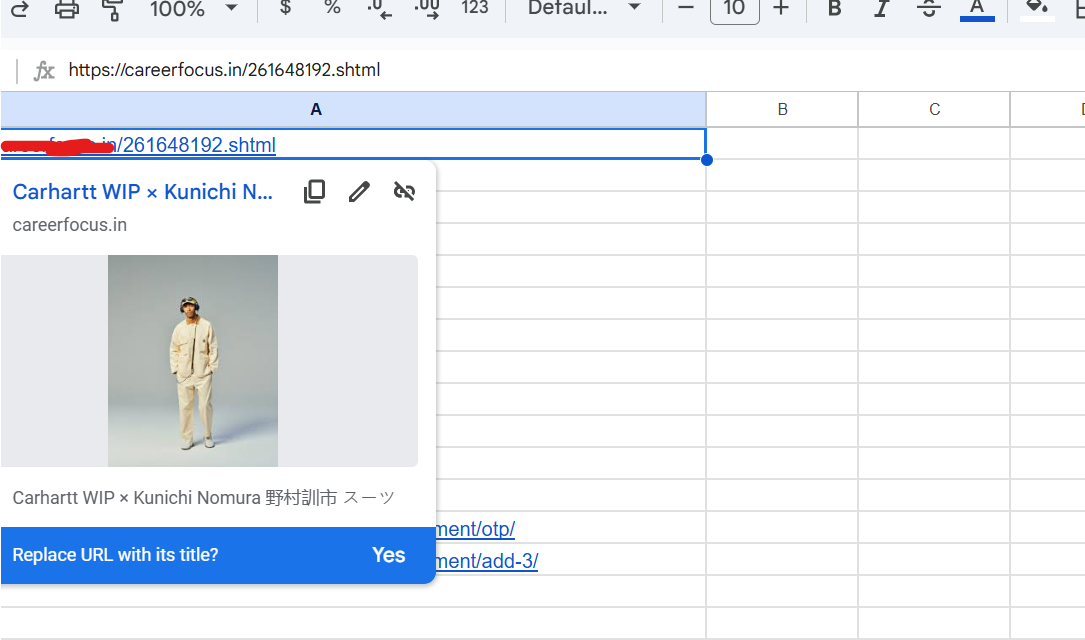

Using a Google Sheet made tracking the indexed spam URLs much easier. Here’s a quick trick I used:

- Organize URLs in Google Sheets: I pasted the URLs from the exported data into a Google Sheet, keeping them neatly arranged.

- Check Index Status with a Hover: For each URL, I hovered the mouse over the link. If a thumbnail preview appeared, the page was still indexed in Google. If no thumbnail popped up, it meant the page had been successfully deindexed.

This small trick saved me hours of manual checks and made it easy to see which pages still needed to be submitted to Google’s Outdated Content Tool, so I could focus only on those and track overall progress.
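To build the list of URLs for the sheet in the first place, a quick script can help. This Python sketch assumes you’ve exported your indexed pages from Search Console as a CSV with a column named `URL` (check the actual header in your export) and that you have a set of your legitimate URLs, for example from your real sitemap. Anything indexed that isn’t one of your own pages becomes a spam candidate. The column name and function names are assumptions; review the output by hand before acting on it.

```python
import csv
import io


def load_urls(csv_text: str, column: str = "URL") -> set[str]:
    """Read one column of a CSV export into a set of URLs, skipping blanks."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[column].strip() for row in reader if (row.get(column) or "").strip()}


def spam_candidates(indexed_csv: str, legit_urls: set[str], column: str = "URL") -> list[str]:
    """Return indexed URLs that are not in the site's legitimate URL set, sorted."""
    return sorted(load_urls(indexed_csv, column) - legit_urls)


if __name__ == "__main__":
    export = (
        "URL,Last crawled\n"
        "https://example.com/,2024-05-01\n"
        "https://example.com/abc123.html,2024-05-02\n"
    )
    print(spam_candidates(export, {"https://example.com/"}))  # ['https://example.com/abc123.html']
```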

Step 4: Creating a Temporary Sitemap for Faster Deindexing

To speed up deindexing, I created a temporary XML sitemap listing all the spam page URLs. Submitting this sitemap in Google Search Console encouraged Google to recrawl those URLs sooner, discover they were gone, and drop them from the index faster.

- Submit Only the Spam Pages: List only the removed spam URLs, and make sure each one already returns an error (such as a 410) so that when Google recrawls them, it finds nothing to re-index.

- Remove the Temporary Sitemap Afterward: Once Google started deindexing these pages, I deleted the temporary sitemap so it wouldn’t keep pointing Google at URLs that no longer exist.
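Writing that temporary sitemap by hand is tedious with thousands of URLs. Here’s a minimal Python sketch using the standard library’s `xml.etree.ElementTree` to produce a file in the sitemaps.org format. It de-duplicates the URLs and gives each one the same `lastmod` date (the date you removed the spam), on the assumption that a recent `lastmod` encourages a recrawl; that’s a hint to Google, not a guarantee. ElementTree escapes characters like `&` automatically, which matters for URLs with query strings. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str], lastmod: date) -> str:
    """Return a sitemaps.org XML document listing each URL once."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in sorted(set(urls)):  # de-duplicate, stable order
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # '&' is escaped to '&amp;'
        ET.SubElement(entry, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    spam_urls = [
        "https://example.com/abc123.html",
        "https://example.com/item.php?id=1&ref=x",
    ]
    print(build_sitemap(spam_urls, date.today()))
```

You’d save the output as an XML file on your site and submit it in the Sitemaps report in Search Console.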

Step 5: Setting a 410 Status Code for Deleted Pages

After removing the spam pages from my server, I configured their URLs to return a 410 HTTP status code instead of a 404. A 410 tells Google that these pages are “Gone” and not coming back, which can speed up deindexing.

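Why 410 Instead of 404? A 404 only says the page wasn’t found, which could be temporary, so Google may check back later before dropping it. A 410 says the page is permanently gone, which may prompt Google to deindex it a little faster. Both eventually lead to removal, but for spam you never want back, 410 is the clearer signal.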
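How you return a 410 depends on your stack: web server configuration, a CMS plugin, or application code. As one illustration for a Python site served through WSGI, here’s a minimal middleware sketch that answers “410 Gone” for an explicit set of removed paths and passes everything else through to your app. An exact list (for example, the spam URLs you collected) is safer than a broad pattern, because a legitimate page can’t be caught by accident. It matches on `PATH_INFO` only, so query strings are ignored. The names are hypothetical, and Google only sees the 410 if robots.txt lets it crawl the URL.

```python
from typing import Callable, Iterable


def gone_middleware(app: Callable, removed_paths: Iterable[str]) -> Callable:
    """Wrap a WSGI app so requests for removed paths get '410 Gone'."""
    removed = frozenset(removed_paths)

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        # PATH_INFO excludes the query string, so "/abc.html?x=1" matches "/abc.html".
        if environ.get("PATH_INFO", "") in removed:
            body = b"410 Gone"
            start_response("410 Gone", [
                ("Content-Type", "text/plain; charset=utf-8"),
                ("Content-Length", str(len(body))),
            ])
            return [body]
        return app(environ, start_response)

    return wrapped


# Example usage: wrap your existing WSGI application object.
# application = gone_middleware(application, {"/abc123.html", "/xyz789.html"})
```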

Step 6: Building a Long-Term Recovery Plan

Recovering from a hack doesn’t end with cleanup; it requires ongoing efforts to ensure security and restore rankings:

- Update All Site Software: Keeping plugins, themes, and CMS software up to date reduces your vulnerability to future hacks.

- Run Regular Security Scans: Using a security plugin like Wordfence or Sucuri to scan for malware and vulnerabilities helps catch problems early.

- Monitor Google Search Console Alerts: Pay attention to any unusual activity or indexing issues. Catching them early can prevent massive spam indexing.

- Create Quality Content: As my site recovered, I doubled down on creating valuable, relevant content, which not only restored my rankings but also improved user engagement.
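Security plugins like Wordfence or Sucuri cover much more than this, but to illustrate the core idea behind a file-integrity check, here’s a minimal Python sketch: record a SHA-256 hash of every file while the site is known to be clean (for example, right after restoring from backup), then compare later snapshots against it. An unexpected new file, such as a randomly named PHP file, would show up under `added`. The function names are hypothetical, and legitimate updates will also show up as changes, so you’d refresh the baseline after each one (saving it with `json.dump` works fine).

```python
import hashlib
from pathlib import Path


def snapshot(root: str) -> dict[str, str]:
    """Map every file under `root` (as a relative POSIX path) to its SHA-256 hex digest."""
    base = Path(root)
    return {
        path.relative_to(base).as_posix(): hashlib.sha256(path.read_bytes()).hexdigest()
        for path in sorted(base.rglob("*"))
        if path.is_file()
    }


def compare(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report files added, removed, or modified since the baseline snapshot."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(
            p for p in baseline.keys() & current.keys() if baseline[p] != current[p]
        ),
    }
```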

Final Thoughts: Lessons from the Japanese Keyword Hack

After months of effort, I finally saw my rankings return to their original state. Looking back, this experience taught me several valuable lessons about website security and crisis management:

- Backup Regularly: If I hadn’t had a recent backup, recovery would have been much harder.

- Use Strong, Unique Passwords with 2FA: Proper security measures would most likely have prevented this attack.

- Patience is Key: Recovering from such a hack takes time. Results don’t come overnight.

- Stay Updated on Security: The web constantly evolves, and staying informed about security practices is essential.

Recovering from the Japanese keyword hack isn’t easy, but with the right actions, you can protect your site and restore its reputation. Learn from my mistakes—secure your accounts, stay vigilant, and keep your site’s software updated.